Heuristics

Constructive search needs to explore an exponentially large search space. In order to have any hope to find a solution, or prove that none exist, it is crucial that this exploration is done in a smart way. Problems that constructive search is applied to are NP-complete, so we don’t have an exact way of making smart decisions about this exploration. Instead, we rely on heuristics to make generally good decisions, that will hopefully help us either find a solution, or prove that one does not exist quickly.

There are two categories of these heuristics - variable ordering heuristics, and value ordering heuristics. Let’s take a closer look at how they work.

Decisions, decisions …

You may recall this little bit of code from an earlier post where we covered the search algorithm:

s.current.decision = s.makeNextDecision()This call to makeNextDecision() is made whenever we enter a new node in the search tree. To give a quick reminder, at this point we may have made other decisions at the ancestor nodes in the search tree and are exploring some subspace of the overall search space. We’ll keep making decisions until we either find a solution, or exhaust the possibilities. Also, as another quick reminder, when I say “make a decision”, I am referring to picking an unassigned variable and a value from its live domain to try next. You can check out the older posts if you need a refresher on live domains, constraint propagation and solver basics in general.

For most non-trivial problems it becomes important to make good decisions, or our search can run exponentially long trying to find a solution, while also thrashing and unable to prove that no solutions exist. A quick observation is that at any point during the search we are either in a search space that contains a solution, or does not. This distinction, while obvious, can be useful when talking about decision making; i.e., heuristics:

For most non-trivial problems it becomes important to make good decisions, or our search can run exponentially long trying to find a solution, while also thrashing and unable to prove that no solutions exist. A quick observation is that at any point during the search we are either in a search space that contains a solution, or does not. This distinction, while obvious, can be useful when talking about decision making; i.e., heuristics:

- When search lands in a subspace that has no solutions, good heuristics let it quickly confirm that no solutions exist, so that search can move else sooner. Such subspaces are called infeasible.

- When search lands in a subspace that does have solutions, good heuristics lead search towards those solutions. These subspaces are called feasible.

Decisions involve choosing a variable and a live value to try out, and similarly heuristics are commonly viewed as two parts - one type for choosing a variable, and another to choose a value. They are called variable ordering and value ordering heuristics, respectively.

Variable ordering heuristics

Let’s glance at the two states search can be in again:

- Subspace contains a solution: we’ll need to assign all variables to get to the solution, but if the one we pick is assigned to a value that doesn’t land on a solution, we’ll want to prove infeasibility quickly.

- Subspace that does not contain a solution: we simply want to prove infeasibility quickly.

Variable ordering heuristics have no control over values, so their goal is simple, how can we determine whether the current subspace has no solutions, quickly? There are a slew of variable ordering heuristics, but the good news is that they all appear to fall under the same category - fail fast. The idea is, we have to pick some variable to assign, and if we are in infeasible space, picking a variable that lets us fail fast is best, as we can move on to another subspace quickly. Interestingly, this principle is adopted elsewhere, like systems design, agile methodologies, and probably a few other things unrelated to CSPs. Back to CSPs and constructive search though: the most basic flavor of this technique is to choose a variable with fewest live values left in its domain, and the rationale is - if we’re infeasible, we’ll need to try all values, the fewer there are, the faster it will be to eliminate them.

There are quite a few flavors / improvements upon this most basic “fail fast” heuristic, so I’ll mention a few:

- dom/deg: in addition to picking the variable with fewest live values, we’ll also divide by the number of constraints the variable is involved in. The more constrained the variable, the more likely we are to choose it; the exact formula for picking the next variable is literally # of live values divided by # of constraints the variable is part of, and we pick the unassigned variable that gives the lowest number using this formula.

- dom/ddeg: similar to the above, but also accounts for dynamic nature of search (“ddeg” stands for “dynamic degree”) by only counting constraints that involve unassigned variables (that’s a slight oversimplification, see this for full details on this and lots more).

- dom/wdeg: extends dom/ddeg heuristic by also keeping track of when a constraint causes a wipeout of a variable, and using that as part of the evaluation/choosing the variable. For details, again, please refer to proper scientific articles like the above. This is the variable ordering heuristic used by my solver.

Value ordering heuristics

In my by now rather dated experience (decade plus… yikes), value ordering heuristics largely only matter when we are in feasible subspace, and their goal is to find the variable assignment that would lead search towards the solution. As many variables may still need to be assigned, another way of framing this is that a good value ordering heuristic will avoid landing us in infeasible search subspaces, which can be expensive to recover from.

You can see how value ordering heuristics are in some way opposite of variable ordering heuristics - they are more useful when in feasible spaces, and aim to find a solution, so it’s not too surprising that for value ordering heuristics to be effective they prefer selecting values that are more likely to succeed first, and defer choosing other values until later. General-purpose value ordering heuristics can achieve this by preferring values that essentially leave the problem least constrained. For classes of problems where estimating complexity of a subproblem can be done efficiently, a good value ordering heuristic can be obtained by preferring values that lead to sub-problems that are most likely to be solvable.

All that being said, there’s a simple and lazy (and somewhat unsatisfying) way out - it’s often fastest and simplest to just choose randomly. This tends to outperform other simple heuristics like lexicographic, and could be made deterministic for the sake of reproducibility by seeding the RNG. This is the value ordering heuristic used by my solver, albeit I’ve dabbled with specialized ones for crosswords, so far to no avail.

Choosing both together?

It may be useful to choose the variable and the value together, rather than separately, since we’ll need them both for making a decision.

Consider the following example:

- We have unassigned variables: A, B, and C.

- A and C have 3 live values in their domains, and B has 5.

- In this case, applying the “fail fast” variable ordering heuristic will result in a tie between A and C, with either of them being good candidates for being assigned next. Looking deeper at the potential values of each of these variables could be a useful way to break this tie. For example, it could be the case that one of A’s values could be particularly promising, so we may want to select A and that value together.

I’ve not seen this done in CSPs, but I wouldn’t be surprised if that happened more recently, or was done with other optimization approaches, like MIP or SAT. If you’re familiar with this, drop me a note (see social links at the top)!

Lexicographic ordering

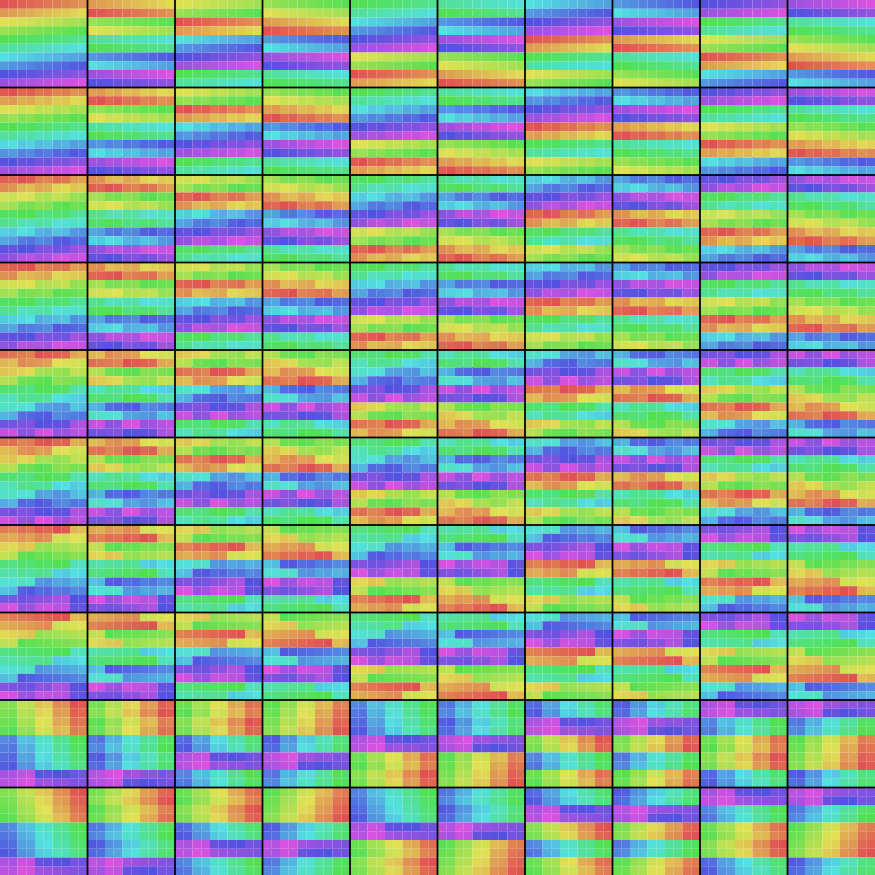

For both kinds of heuristics lexicographic ordering is primarily used to get a predictable and easy to understand run of the solver. That can be nice for basic sanity checking or toy problems, like the 4-queens problem from the earlier post. It can also lead to some fun visualizations, like the 100x100 sudoku below, but typically are of little use for hard problems.

This took about 1 hour to generate on my home machine. You can make a smaller one with lexicographic ordering using the the online version, in about a second.

This took about 1 hour to generate on my home machine. You can make a smaller one with lexicographic ordering using the the online version, in about a second.